Read time: 4 minutes

Training machine learning models inside notebooks is just one step toward building real-world ML services. As exciting as it is, a trained model brings no business value until you deploy and operationalize it.

In this article, you will learn how to transform an all-in-one Jupyter notebook, with data preparation and ML model training, into a fully working batch-scoring system using the 3-pipeline design.

Let’s get started!

One Jupyter notebook as a starting point

The starting point is this one Jupyter notebook where you:

Loaded data from a CSV file

Engineered features and targets

Trained and validated an ML model.

Generated predictions on the test set.

These are the kind of notebooks you find on websites like Kaggle.

Let's now turn this notebook into a batch-prediction service.

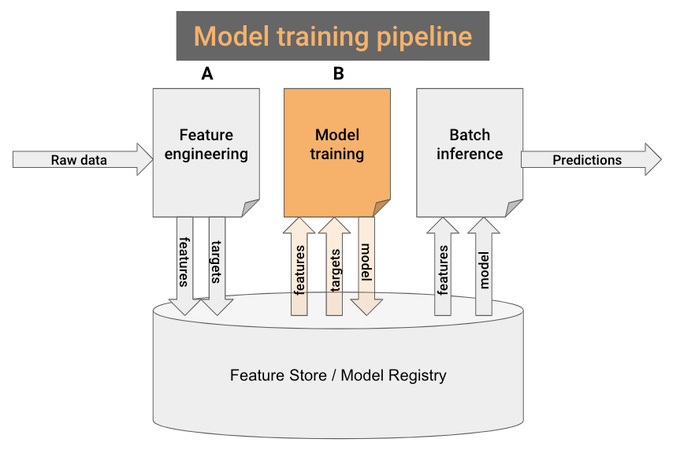

The 3-pipeline architecture

A batch-prediction service ingests raw data and outputs model predictions on a schedule (e.g. every hour).

You can build one using this 3-pipeline architecture:

Feature pipeline 📘

Training pipeline 📙

Batch inference pipeline 📒

Step 1. Feature pipeline

Break your initial all-in-one notebook into 2 smaller ones:

📘 Notebook A reads raw data and generates features and targets.

📙 Notebook B takes in the features and targets and outputs a trained model.

Notebook A is your feature pipeline. Let's now put it to work.

You need 2 things:

Automation. You want the feature pipeline to run every hour. You can do this with a scheduled GitHub Actions workflow.

Persistence. You need a place to store the features generated by the feature pipeline. For that, use a managed Feature Store.
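Here is a minimal sketch of what Notebook A could look like once you turn it into a script. Everything named here is an assumption for illustration: the raw columns (`timestamp`, `rides`), the single lag feature, and the `feature_store` folder, which is only a local stand-in for a managed Feature Store. In a real setup you would swap `save_features` for your Feature Store client's write call.

```python
import csv
from pathlib import Path

# Hypothetical stand-in for a managed Feature Store: one CSV file per batch.
FEATURE_STORE_DIR = Path("feature_store")
FIELDNAMES = ["timestamp", "rides_previous_hour", "target_rides"]


def build_features(raw_rows):
    """Turn raw rows into features and targets.

    Assumes each raw row has 'timestamp' (ISO string) and 'rides' keys.
    Feature: rides in the previous hour. Target: rides in the current hour.
    """
    rows = sorted(raw_rows, key=lambda r: r["timestamp"])
    features = []
    for previous, current in zip(rows, rows[1:]):
        features.append({
            "timestamp": current["timestamp"],
            "rides_previous_hour": float(previous["rides"]),
            "target_rides": float(current["rides"]),
        })
    return features


def save_features(features, batch_id, store_dir=FEATURE_STORE_DIR):
    """Persist one feature batch so the other pipelines can read it later."""
    store_dir.mkdir(parents=True, exist_ok=True)
    path = store_dir / f"features_{batch_id}.csv"
    with path.open("w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(features)
    return path


def run_feature_pipeline(raw_rows, batch_id, store_dir=FEATURE_STORE_DIR):
    """Entry point the hourly scheduler would call."""
    return save_features(build_features(raw_rows), batch_id, store_dir)
```

The point of the design is that the scheduler only ever calls `run_feature_pipeline`, and every other pipeline only ever reads what it wrote.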

Step 2. Model training pipeline

Remember the 2 sub-notebooks (📘 A and 📙 B) you created in step #1?

Notebook B 📙 is your model training pipeline.

Well, almost. You only need to change 2 things...

Read the features from the Feature Store, not from CSV files.

Save the trained model (e.g. as a pickle file) in the model registry, not locally on disk, so the batch-inference pipeline can later load it to generate predictions.
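These two changes could look roughly like the sketch below. It makes several assumptions: the `feature_store` and `model_registry` folders are local stand-ins for a real Feature Store and model registry, the column names (`rides_previous_hour`, `target_rides`) are made up, and the one-feature least-squares fit is a toy model standing in for whatever Notebook B actually trains.

```python
import csv
import pickle
from pathlib import Path

FEATURE_STORE_DIR = Path("feature_store")    # hypothetical Feature Store stand-in
MODEL_REGISTRY_DIR = Path("model_registry")  # hypothetical model registry stand-in


def load_features(store_dir=FEATURE_STORE_DIR):
    """Change 1: read all stored feature batches, not the original CSV file."""
    rows = []
    for path in sorted(store_dir.glob("features_*.csv")):
        with path.open(newline="") as f:
            rows.extend(csv.DictReader(f))
    return rows


def train_model(rows):
    """Fit target = slope * feature + intercept by ordinary least squares."""
    if not rows:
        raise ValueError("no feature rows to train on")
    xs = [float(r["rides_previous_hour"]) for r in rows]
    ys = [float(r["target_rides"]) for r in rows]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x if var_x else 0.0
    return {"slope": slope, "intercept": mean_y - slope * mean_x}


def register_model(model, registry_dir=MODEL_REGISTRY_DIR):
    """Change 2: pickle the model under the next version, e.g. model_v3.pkl."""
    registry_dir.mkdir(parents=True, exist_ok=True)
    version = len(list(registry_dir.glob("model_v*.pkl"))) + 1
    path = registry_dir / f"model_v{version}.pkl"
    with path.open("wb") as f:
        pickle.dump(model, f)
    return path


def run_training_pipeline(store_dir=FEATURE_STORE_DIR,
                          registry_dir=MODEL_REGISTRY_DIR):
    """Load features, train, and register the resulting model."""
    return register_model(train_model(load_features(store_dir)), registry_dir)
```

Versioning each saved model, even this crudely, means the batch-inference pipeline can always pick the newest one without the training pipeline overwriting anything.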

Step 3. Batch-inference pipeline

Build a new notebook that:

Loads the model from the model registry

Loads the most recent feature batch.

Generates model predictions and saves them somewhere (e.g. S3 or a database) where downstream services can use them.
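The three steps above can be sketched as follows. Again, this is an illustration under assumptions, not a reference implementation: the `feature_store`, `model_registry` and `predictions` folders are local stand-ins for the real services, the registry is assumed to hold pickled dicts with `slope` and `intercept` keys (a toy linear model), and the column name `rides_previous_hour` is made up.

```python
import csv
import json
import pickle
from pathlib import Path

FEATURE_STORE_DIR = Path("feature_store")    # hypothetical Feature Store stand-in
MODEL_REGISTRY_DIR = Path("model_registry")  # hypothetical model registry stand-in
PREDICTIONS_DIR = Path("predictions")        # stand-in for S3 or a database


def load_latest_model(registry_dir=MODEL_REGISTRY_DIR):
    """Load the highest-numbered model_v<N>.pkl from the registry folder."""
    paths = sorted(
        registry_dir.glob("model_v*.pkl"),
        key=lambda p: int(p.stem[len("model_v"):]),
    )
    if not paths:
        raise FileNotFoundError("no model found in the registry")
    # Only unpickle files you trust: pickle can execute arbitrary code.
    with paths[-1].open("rb") as f:
        return pickle.load(f)


def load_latest_feature_batch(store_dir=FEATURE_STORE_DIR):
    """Load the most recent batch; assumes batch ids sort chronologically."""
    paths = sorted(store_dir.glob("features_*.csv"))
    if not paths:
        raise FileNotFoundError("no feature batch found in the store")
    with paths[-1].open(newline="") as f:
        return list(csv.DictReader(f))


def predict(model, rows):
    """Apply the toy linear model to every row of the batch."""
    return [
        {
            "timestamp": r["timestamp"],
            "predicted_rides": model["slope"] * float(r["rides_previous_hour"])
            + model["intercept"],
        }
        for r in rows
    ]


def run_batch_inference(store_dir=FEATURE_STORE_DIR,
                        registry_dir=MODEL_REGISTRY_DIR,
                        output_dir=PREDICTIONS_DIR):
    """Load model and features, predict, and save for downstream services."""
    predictions = predict(load_latest_model(registry_dir),
                          load_latest_feature_batch(store_dir))
    output_dir.mkdir(parents=True, exist_ok=True)
    path = output_dir / "latest_predictions.json"
    path.write_text(json.dumps(predictions, indent=2))
    return path
```

Notice that this pipeline never touches raw data or training code. It only talks to the feature store and the model registry, which is what lets the three pipelines run and fail independently.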

Finally, create another scheduled GitHub Actions workflow to run the batch-inference pipeline.

Boom.

My advice 💡

The job market for data scientists is getting crowded, and finding a job is becoming more challenging. If you wanna stay on top, you need to expand your domain of expertise.

So, if you like ML, the next obvious step is to move into MLOps. These skills are in great demand and will stay so for a while, as most companies are still far from leveraging their internal data and ML to run more efficiently.

So try to build your first ML system today. Feel the adrenaline rush, and take one solid step toward becoming a top full-stack data scientist.

And let me know how it goes.

Happy learning!

Cheers

Pau

Hi Chirag,

If you wanna get hands-on implementing the 3-pipeline design using a real-world dataset and problem, I suggest you take a look at The Real-World ML Tutorial:

https://realworldmachinelearning.carrd.co/

Cheers

Pau

Nice insights. It would be even better if we had a code example that implements this architecture on a small dataset.