Today I want to show you how to design a real-time ML system that can predict crypto prices 5 minutes into the future, using

Python and Rust to build our microservices,

Apache Kafka for real-time data streaming,

LLMs for sentiment extraction from unstructured data, and

Kubernetes for a production-ready deployment.

Let’s start!

Our goal 🎯

Let's build an ML system that can predict crypto prices 5 minutes into the future.

Attention 🚨

Predicting short-term crypto price movements is very hard.

If it weren’t, everyone would be rich. And, as far as I know, not everyone is rich.

I am not rich for example.

To predict this target we want to use ML, and for that we need reliable data sources that can help us generate the best predictions we can.

In this case we will use 2 real-time data streams:

Crypto trade data

Market news about blockchain, or any economic factor that can possibly impact the target metric we want to predict.

System design 📐

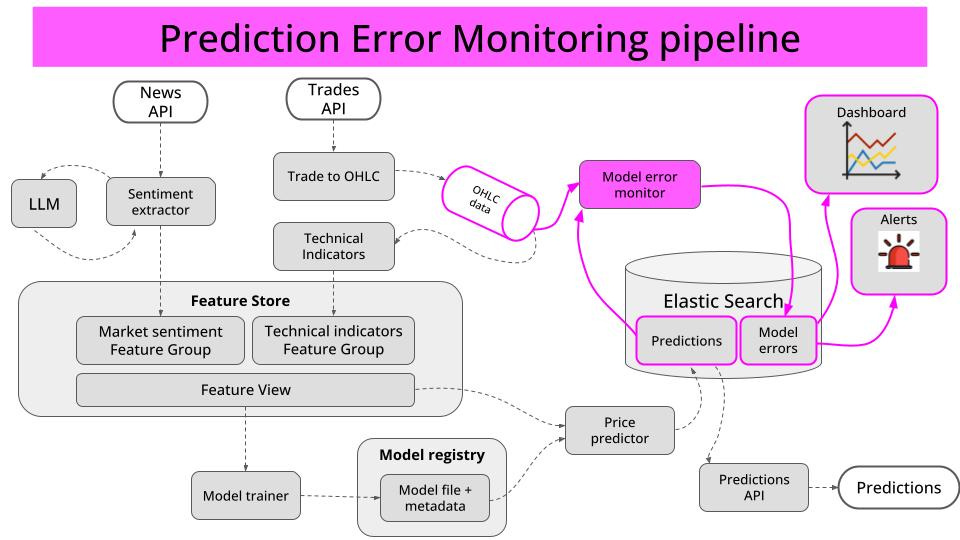

Let's break down our system into 4 types of pipelines:

Feature pipelines (2 of them)

Training pipeline

Inference pipeline

Monitoring pipeline

Let's go one by one.

1. Feature Pipelines 💾

Our 2 feature pipelines transform raw data into reusable ML model features, and save them in our Feature Store.

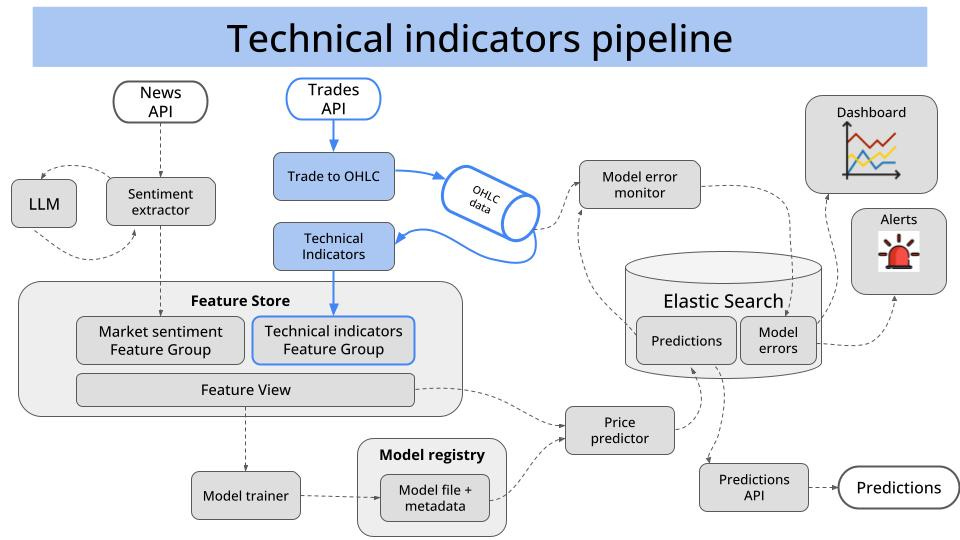

Feature pipeline for technical indicators

Ingests market trades from a websocket API like Kraken or Binance,

Aggregates them into 1-minute windows

Engineers technical indicators to capture momentum in the market, and

Pushes them to the Feature Store
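Here is a minimal sketch of the aggregation and indicator steps above, in plain Python. The `(timestamp, price, quantity)` trade format, the `Candle` class and the choice of rate of change as the momentum indicator are my assumptions for illustration, not the exact setup of the system. In the real pipeline the trades would arrive from the websocket API instead of a list.

```python
from dataclasses import dataclass


@dataclass
class Candle:
    window_start: int  # epoch seconds, aligned to the start of the window
    open: float
    high: float
    low: float
    close: float
    volume: float


def aggregate_trades(trades, window_seconds=60):
    """Group (timestamp, price, quantity) trades into fixed-size windows.

    Assumes trades are sorted by timestamp (epoch seconds).
    Windows without any trade produce no candle.
    """
    candles = []
    for ts, price, qty in trades:
        start = int(ts) - int(ts) % window_seconds
        if candles and candles[-1].window_start == start:
            # Trade belongs to the current window: update it in place.
            c = candles[-1]
            c.high = max(c.high, price)
            c.low = min(c.low, price)
            c.close = price
            c.volume += qty
        else:
            # First trade of a new window.
            candles.append(Candle(start, price, price, price, price, qty))
    return candles


def rate_of_change(candles, periods=3):
    """Percentage change of the close price versus `periods` candles ago."""
    values = []
    for i, candle in enumerate(candles):
        if i < periods:
            values.append(None)  # not enough history yet
        else:
            previous = candles[i - periods].close
            values.append((candle.close - previous) / previous * 100.0)
    return values


trades = [(0, 100.0, 1.0), (30, 110.0, 2.0), (60, 105.0, 1.0), (125, 120.0, 0.5)]
candles = aggregate_trades(trades)
momentum = rate_of_change(candles, periods=1)
```

Each candle plus its indicator values is what would be pushed to the Feature Store as one feature row.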

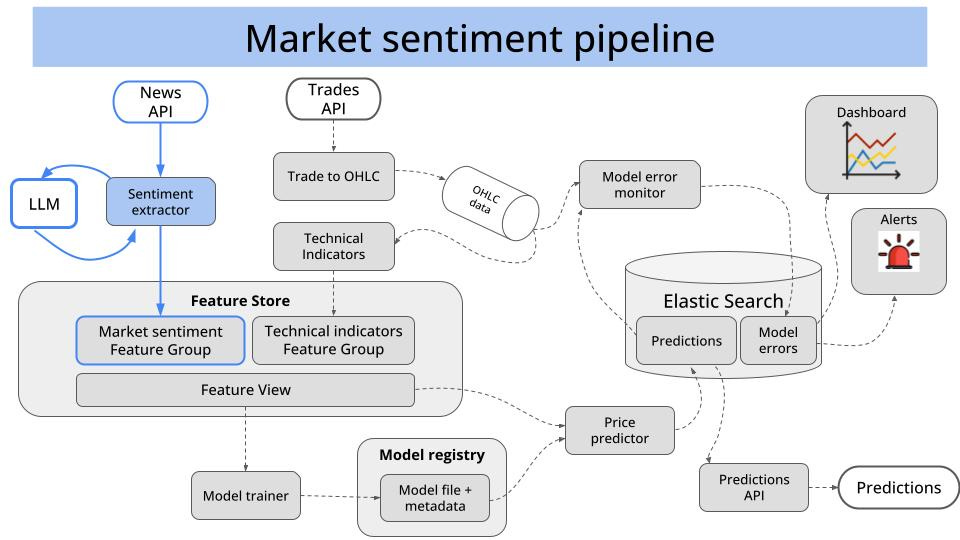

Feature pipeline for market sentiment

Scrapes news by polling a website like Coinbase,

Parses the raw text into a structured sentiment score using an LLM, and

Pushes the score to the Feature Store
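The parsing step is where things tend to break, because an LLM returns text and the Feature Store wants structure. Below is a small sketch of how the LLM output could be validated before it is stored. I am assuming we prompt the LLM to answer with a JSON list of `{"asset", "score"}` objects, where the score is -1 (bearish), 0 (neutral) or 1 (bullish). That schema and the names `SentimentScore` and `parse_llm_response` are hypothetical, and the LLM call itself is left out.

```python
import json
from dataclasses import dataclass


@dataclass
class SentimentScore:
    asset: str
    score: int  # assumed scale: -1 bearish, 0 neutral, 1 bullish


def parse_llm_response(raw: str) -> list:
    """Turn the raw LLM answer into validated SentimentScore objects.

    Anything that does not match the assumed schema is dropped,
    so malformed answers never reach the Feature Store.
    """
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return []
    if not isinstance(payload, list):
        return []

    scores = []
    for item in payload:
        if not isinstance(item, dict):
            continue
        asset = item.get("asset")
        score = item.get("score")
        # type(...) is int rejects booleans and floats.
        if isinstance(asset, str) and type(score) is int and score in (-1, 0, 1):
            scores.append(SentimentScore(asset.upper(), score))
    return scores


raw_answer = '[{"asset": "btc", "score": 1}, {"asset": "ETH", "score": 5}, {"asset": "sol", "score": -1}]'
parsed = parse_llm_response(raw_answer)
```

Here the ETH entry is discarded because its score falls outside the assumed scale.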

2. Training pipeline 🏋🏽

We can use a boosted tree model like XGBoost to uncover any patterns between the

current technical indicators, and

market sentiment

and

the price of BTC or ETH 5 minutes into the future (target metric)
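To make the target concrete, here is a sketch of how the training set could be assembled: the features at minute t are paired with the close price at minute t + 5. The row format and the feature names `roc` and `sentiment` are assumptions for illustration. The resulting `X` and `y` could then be passed to a regressor such as XGBoost.

```python
def build_training_set(rows, horizon=5):
    """Pair the features at minute t with the close price at minute t + horizon.

    `rows` is a list of dicts, one per minute, sorted by time and without gaps.
    The last `horizon` rows have no known future price, so they are skipped.
    """
    X, y = [], []
    for t in range(len(rows) - horizon):
        X.append([rows[t]["roc"], rows[t]["sentiment"]])
        y.append(rows[t + horizon]["close"])
    return X, y


def time_based_split(X, y, train_fraction=0.8):
    """Train on the oldest rows and validate on the newest ones.

    A random split would leak future information into training.
    """
    cut = int(len(X) * train_fraction)
    return X[:cut], y[:cut], X[cut:], y[cut:]


# Toy data: 10 minutes of hypothetical feature rows.
rows = [{"roc": i * 0.1, "sentiment": 0, "close": 100.0 + i} for i in range(10)]
X, y = build_training_set(rows, horizon=5)
X_train, y_train, X_val, y_val = time_based_split(X, y)
```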

The final model is pushed to the model registry, so it can be loaded by our next pipeline: the inference pipeline.

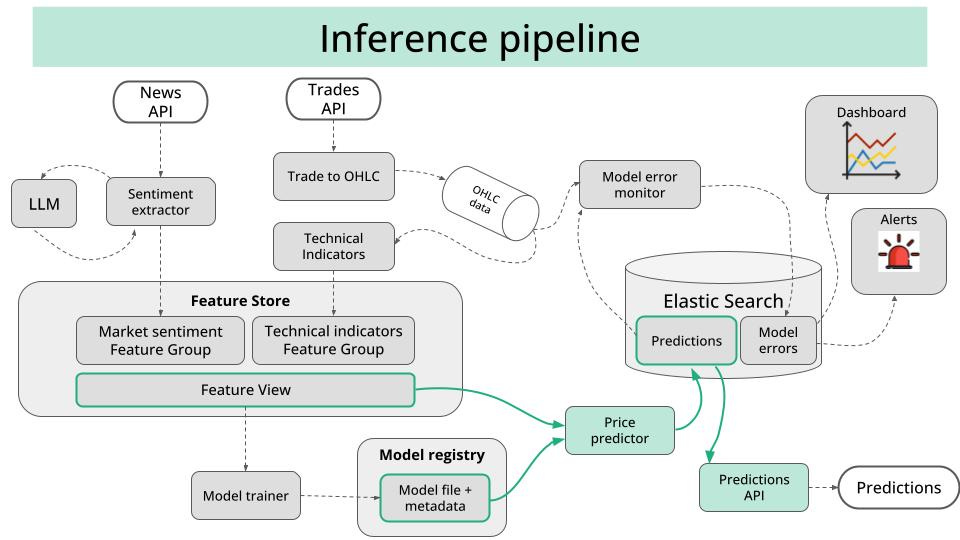

3. Inference pipeline 🔮

Finally, we need to generate fresh predictions and make them accessible. This is what the inference pipeline does.

To serve the final predictions, while making them easily accessible to our next pipeline (the monitoring pipeline), I recommend you split this step into 2 services:

Prediction generator

Loads the model from the registry,

Continuously reads the latest features from the Feature Store,

Generates a new prediction, and

Pushes this prediction into a DB, like an Elasticsearch index.
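The loop above can be sketched with in-memory stand-ins. Everything here is hypothetical: `DummyModel` replaces the model loaded from the registry, and plain Python lists replace the Feature Store and the prediction index. The point is the shape of one iteration, not the real clients.

```python
class DummyModel:
    """Stand-in for the model loaded from the registry (hypothetical)."""

    def predict(self, features):
        # Naive rule: last close nudged by the momentum indicator (in percent).
        return features["close"] * (1 + features["roc"] / 100.0)


def generate_prediction(model, features, horizon_minutes=5):
    """Turn the latest feature row into a prediction record ready to be stored."""
    return {
        "pair": features["pair"],
        "made_at": features["timestamp"],
        "target_time": features["timestamp"] + horizon_minutes * 60,
        "predicted_price": model.predict(features),
    }


def run_once(model, feature_store, prediction_db):
    """One iteration of the generator: read features, predict, store."""
    latest = feature_store[-1]
    record = generate_prediction(model, latest)
    prediction_db.append(record)
    return record


feature_store = [{"pair": "BTC/USD", "timestamp": 600, "close": 100.0, "roc": 2.0}]
prediction_db = []
record = run_once(DummyModel(), feature_store, prediction_db)
```

Storing `target_time` next to the prediction makes it easy to match the prediction with the actual price later on.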

Prediction API

This is a lightweight service that

Receives incoming requests from client apps,

Finds the predictions in the Elasticsearch index, and

Returns the prediction to the client app.
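The lookup logic of this service fits in a few lines. In this sketch a list of dicts stands in for the documents in the index, and the function returns a status code and a body instead of being wired to a web framework. The function name and record fields are assumptions for illustration.

```python
def get_latest_prediction(prediction_db, pair):
    """Return (status_code, body) with the most recent prediction for `pair`."""
    candidates = [r for r in prediction_db if r["pair"] == pair]
    if not candidates:
        return 404, {"error": f"no prediction available for {pair}"}
    latest = max(candidates, key=lambda r: r["target_time"])
    return 200, latest


stored = [
    {"pair": "BTC/USD", "target_time": 900, "predicted_price": 102.0},
    {"pair": "BTC/USD", "target_time": 960, "predicted_price": 103.0},
]
status, body = get_latest_prediction(stored, "BTC/USD")
missing_status, missing_body = get_latest_prediction(stored, "ETH/USD")
```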

Bonus 🎁

If latency is critical, you can build a websocket API, instead of a REST API, to avoid reading and writing to Elasticsearch.

4. Monitoring pipeline 🔎

There are lots of things you can monitor here. The most fundamental one is the error of your predictions.

To monitor it, you need to build a streaming service that

Listens to incoming price data

Loads the predictions from the DB

Computes the error and

Pushes the error into another Elasticsearch index
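Here is a minimal sketch of the error computation, assuming each stored prediction carries the `target_time` it refers to and actual prices are keyed by `(pair, timestamp)`. These field names are hypothetical. Predictions whose target minute has not arrived yet are skipped.

```python
def compute_errors(predictions, actual_prices):
    """Match each prediction with the actual price at its target time.

    predictions: list of prediction records (dicts).
    actual_prices: dict mapping (pair, timestamp) to the observed price.
    """
    errors = []
    for p in predictions:
        key = (p["pair"], p["target_time"])
        if key not in actual_prices:
            continue  # the actual price is not known yet
        actual = actual_prices[key]
        abs_error = abs(p["predicted_price"] - actual)
        errors.append({
            "pair": p["pair"],
            "target_time": p["target_time"],
            "abs_error": abs_error,
            "pct_error": abs_error / actual * 100.0,
        })
    return errors


def mean_absolute_error(errors):
    """Average absolute error, or None when there is nothing to average."""
    if not errors:
        return None
    return sum(e["abs_error"] for e in errors) / len(errors)


predictions = [
    {"pair": "BTC/USD", "target_time": 900, "predicted_price": 102.0},
    {"pair": "BTC/USD", "target_time": 960, "predicted_price": 103.0},
]
actual_prices = {("BTC/USD", 900): 100.0}
errors = compute_errors(predictions, actual_prices)
mae = mean_absolute_error(errors)
```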

These errors are plotted on a dashboard using Kibana, and trigger alerts in your team's Slack or Discord.

BOOM!

Wanna build this system with me?

On December 2nd, 213 brave students and I will start building this system together, in my course Building a Real Time ML System.

Live. You and me. Step by step.

It will take us at least 4 weeks, and 40 hours of live coding sessions, to go from idea to a fully working system that we will deploy to Kubernetes.

Along the way you will learn

Universal MLOps design principles

Tons of Python tricks

Feature engineering in real time

Using LLMs to engineer market signals from unstructured data

Some Rust magic

... and more

Gift 🎁

As a subscriber to the Real World ML Newsletter you have exclusive access to a 40% discount. For a few hours you can access it at a special price.

Talk to you next week,

Wish you a great weekend,

Pau
