RWML #035: From ML prototypes to ML products

Do you know how to train ML models but don’t know how to build complete ML apps?

This article is for you.

The gap between training models and building ML apps

So, what is the difference between training an ML model and building a full ML app? 🤔

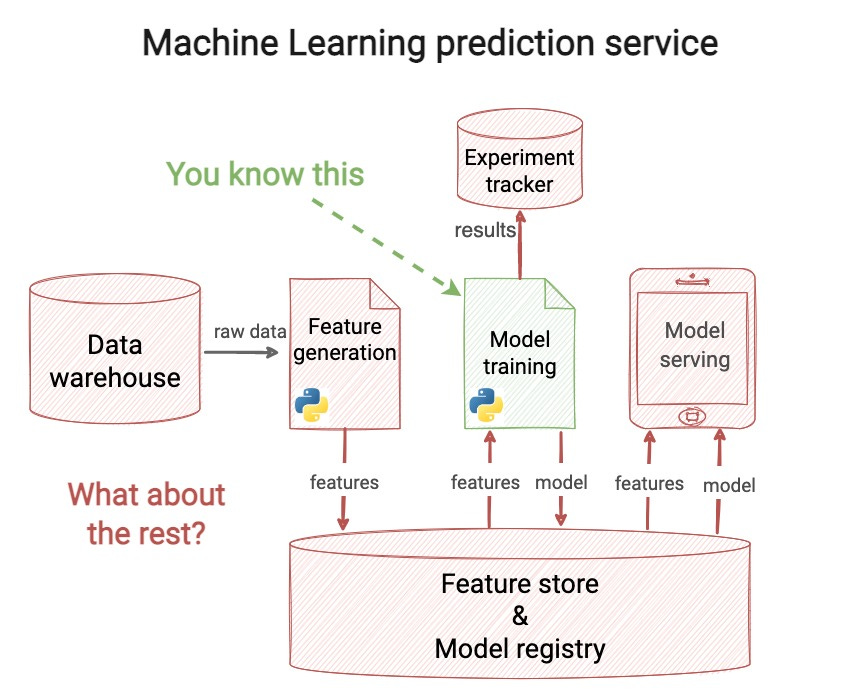

A Machine Learning service is a sequence of computation and storage steps that

takes in raw data, and

outputs model predictions

that help a business make better, smarter decisions.

And model training is JUST ONE of those steps.

While the model you've trained in a Jupyter notebook IS important, you need to build the rest of the system to make it work.
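Here is a minimal sketch of that idea in plain Python. It is a toy, assuming three computation steps that communicate only through two storage locations. The names `Storage`, `feature_pipeline`, `training_pipeline` and `inference_pipeline`, and the price data, are hypothetical. The "model" is just an average return, standing in for a real training job.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Storage:
    """Toy in-memory stand-ins for the storage steps.

    In a real system these would be a feature store and a model registry.
    """
    features: dict[str, list[float]] = field(default_factory=dict)
    models: dict[str, float] = field(default_factory=dict)


def feature_pipeline(raw_prices: list[float], storage: Storage) -> None:
    """Computation step 1: raw data -> features, written to storage."""
    # Feature: change between consecutive prices
    storage.features["returns"] = [
        later - earlier for earlier, later in zip(raw_prices, raw_prices[1:])
    ]


def training_pipeline(storage: Storage) -> None:
    """Computation step 2: features -> model, written to storage.

    The 'model' is just the mean return, a placeholder for real training.
    """
    storage.models["mean_return"] = mean(storage.features["returns"])


def inference_pipeline(last_price: float, storage: Storage) -> float:
    """Computation step 3: load the stored model and output a prediction."""
    return last_price + storage.models["mean_return"]


if __name__ == "__main__":
    storage = Storage()
    prices = [100.0, 101.0, 103.0, 102.0, 104.0]  # made-up raw data
    feature_pipeline(prices, storage)
    training_pipeline(storage)
    print(inference_pipeline(prices[-1], storage))  # prints 105.0
```

In this sketch only `training_pipeline` resembles the notebook work. The other two steps, plus the storage between them, are the "rest of the system". Because the steps share data only through storage, each one could run on its own schedule.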

How do you do that?

2 solutions

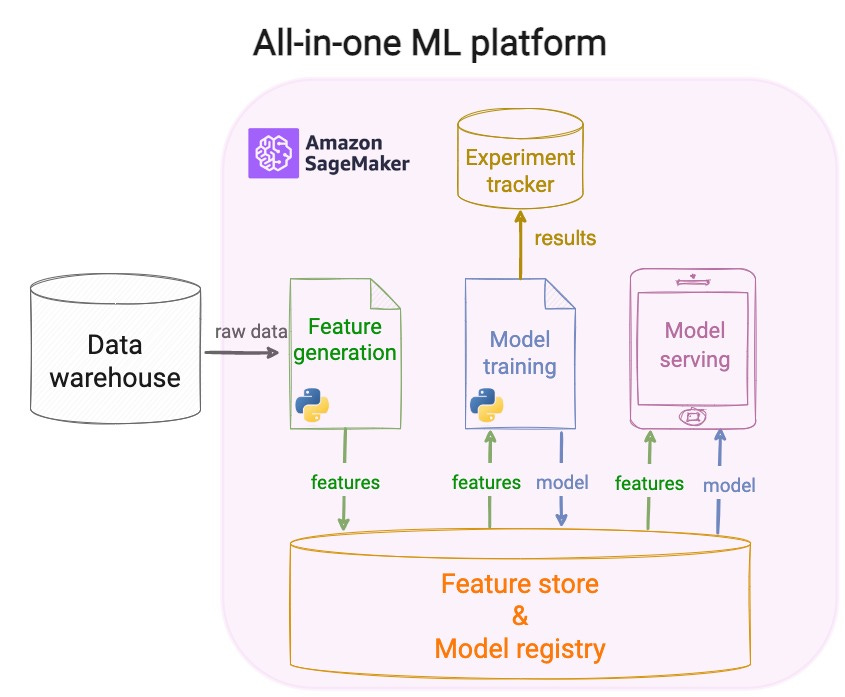

Solution 1 → All-in-one platforms

Cloud providers like AWS offer all-in-one ML platforms, such as Amazon SageMaker.

In this case, your whole ML app is built inside their platform, using their managed services:

→ storing and serving features 📊

→ experiment tracking and model registry 🧪

→ model training 🏋️‍♂️

→ model serving 💁

→ model monitoring 🔎

Pros and cons

✅ Low-cost, integrated solution for small- to medium-sized teams.

❌ Requires cloud expertise to operate.

❌ Vendor lock-in. Migrating your stack to another platform is painful because your business logic and infrastructure code are strongly coupled.

The question is:

Is there an alternative to this “monolithic” approach?

Yes.

Say hello to the Serverless ML movement!

Solution 2 → Integrate best-of-breed MLOps tools (aka Serverless ML)

You choose best-of-breed single-purpose tools, for example:

→ Hopsworks as the Feature Store 📊

→ CometML as the Experiment Tracker and Model Registry 🧪

→ Beam as the Compute Engine to train and serve models 🏋️‍♂️

Serverless tools have Python-first APIs, meaning all you need is to

→ get an API key from the service you want to integrate 🔑

→ pip install its Python SDK 🐍

And you are ready to go.
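A common way to handle that API key is to keep it out of your code and read it from an environment variable. This is an assumption on my part, not something the tools above require. The helper `get_api_key` and the `<SERVICE>_API_KEY` naming scheme are hypothetical. Check each vendor's documentation for the variable name or login parameter its SDK actually expects.

```python
import os


def get_api_key(service: str) -> str:
    """Read a service's API key from the environment.

    Uses a hypothetical naming convention: <SERVICE>_API_KEY,
    e.g. HOPSWORKS_API_KEY or BEAM_API_KEY.
    """
    env_var = f"{service.upper()}_API_KEY"
    key = os.environ.get(env_var)
    if not key:
        # Fail fast with a clear message instead of a confusing SDK error later
        raise RuntimeError(f"Set {env_var} before running this pipeline")
    return key
```

You would then pass the returned key to the SDK's login or client call, so the same code runs locally, in CI, or on a serverless compute platform without edits.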

Pros and cons

✅ No time spent setting up and managing cloud infrastructure.

✅ Your business logic and infrastructure code are no longer coupled, so you can easily migrate part of your infrastructure if you find a better product for your needs.

❌ Not the best option for large enterprises with established teams and cloud or on-premises infra.
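One way to get the decoupling described in the pros above is to have your business logic depend on a small interface rather than on a vendor SDK. The sketch below uses Python's `typing.Protocol` for this. It illustrates the general dependency-inversion pattern, not how Hopsworks or any other tool structures its SDK. `FeatureStore`, `InMemoryFeatureStore` and `build_training_set` are hypothetical names.

```python
from typing import Protocol


class FeatureStore(Protocol):
    """The only feature-store operations the business logic relies on."""

    def write(self, name: str, rows: list[dict]) -> None: ...

    def read(self, name: str) -> list[dict]: ...


class InMemoryFeatureStore:
    """Local stand-in. A vendor-backed class would wrap that vendor's SDK
    behind the same two methods."""

    def __init__(self) -> None:
        self._tables: dict[str, list[dict]] = {}

    def write(self, name: str, rows: list[dict]) -> None:
        self._tables.setdefault(name, []).extend(rows)

    def read(self, name: str) -> list[dict]:
        # Return a copy so callers cannot mutate the stored table
        return list(self._tables.get(name, []))


def build_training_set(store: FeatureStore) -> list[dict]:
    """Business logic: depends on the FeatureStore interface, not on a vendor."""
    return [row for row in store.read("transactions") if row["amount"] > 0]


if __name__ == "__main__":
    store = InMemoryFeatureStore()
    store.write("transactions", [{"amount": 10.0}, {"amount": -3.0}])
    print(build_training_set(store))  # prints [{'amount': 10.0}]
```

With this design, migrating to another feature store means writing one new class with the same `write` and `read` methods, while `build_training_set` stays untouched. The trade-off is that you maintain that thin adapter layer yourself.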

My advice 💁

If you haven’t built an ML app before, I recommend you use serverless infrastructure.

It will help you understand the architecture and design of the whole system, beyond any specific all-in-one product like Amazon SageMaker.

Join the Serverless ML Discord Community 🤗

A few months ago I started the Serverless ML Discord community, with the goal of helping you and millions of ML engineers worldwide build better ML products.

→ Join today, connect with the community, and accelerate your learning.

It is time to BUILD.

See you there!

Pau