Over the past 4 weeks I have talked to several companies that want to develop some sort of agentic platform.

They are all in the same situation.

They managed to build a Proof of Concept for their business case using third-party services (like the OpenAI API) and duct-taped Python scripts.

For example

An agent demo to automate customer support workflows in a gaming company, built as a single Python LangGraph flow running on AWS Lambda.

What’s the problem

I have worked as an ML engineer for 10 years, and I have seen this hype, crash, back-to-basics cycle several times.

10 years ago it was the Deep Learning hype, when companies of all sorts (including the one I was working at back then) thought that Deep Learning for Computer Vision was all about training neural networks from scratch, without thinking about deployment and operationalisation (aka fancy ML without the Ops).

Needless to say, all these projects ended badly (including the one I was working at xD).

And the thing is, I see a similar trend these days with LLM engineering and LLMOps.

Companies know how to build LLM demos, but they don’t know how to scale and operate them. So their toys never see the light of production.

Either because they are

too slow

too expensive

too hard to trust,

or all of the above.

Today I want to share my 2 cents to help you go from LLM toys to LLM products that move the needle for your company.

An LLMOps blueprint

Agentic platforms are not dark magic.

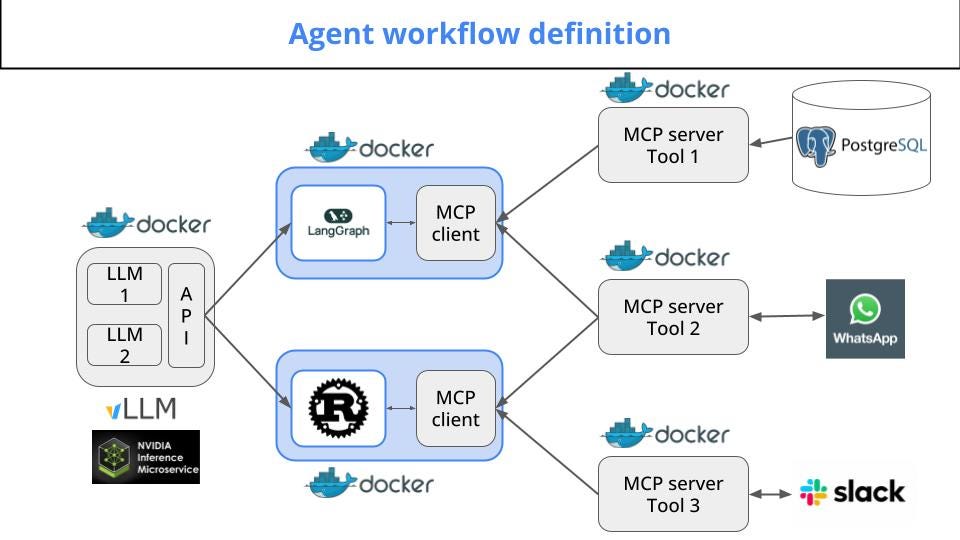

They are just a bunch of applications running as containerised services inside a compute platform.

Let’s go one by one:

Compute platform

When you are a small company, you can start with serverless compute like AWS Lambda or GCP Cloud Functions. However, as you grow and the token volumes of your agentic workflows increase, your cloud bill will grow too. A LOT.

So, unless you are willing to burn cash, I recommend you get on the Kubernetes train.

Your cost curve will flatten, and your return on investment will increase.
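To see why the curve flattens, here is a back-of-the-envelope sketch. It assumes a simplified model where serverless cost grows linearly with every request, while a cluster costs a fixed price per node and you only add a node when the current ones are full. All prices and capacities below are made-up placeholders, not real AWS or Kubernetes pricing, so plug in your own numbers.

```python
import math

# HYPOTHETICAL numbers for illustration only, not real cloud pricing.
SERVERLESS_COST_PER_REQUEST = 0.0004      # $ per agent invocation (assumed)
NODE_COST_PER_MONTH = 300.0               # $ per cluster node per month (assumed)
REQUESTS_PER_NODE_PER_MONTH = 2_000_000   # how much one node can serve (assumed)


def serverless_monthly_cost(requests: int) -> float:
    """Pay-per-use: cost grows linearly with the number of requests."""
    return requests * SERVERLESS_COST_PER_REQUEST


def cluster_monthly_cost(requests: int) -> float:
    """Reserved capacity: pay per node, add a node only when capacity runs out."""
    nodes = max(1, math.ceil(requests / REQUESTS_PER_NODE_PER_MONTH))
    return nodes * NODE_COST_PER_MONTH


for requests in (100_000, 1_000_000, 10_000_000):
    print(f"{requests:>10,} req/month  "
          f"serverless=${serverless_monthly_cost(requests):>8,.0f}  "
          f"cluster=${cluster_monthly_cost(requests):>8,.0f}")
```

With these invented numbers, serverless wins at low volume, the two lines cross at 750,000 requests a month (300 / 0.0004), and above that the cluster's cost per request stays lower as volume grows.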

My advice

The Kubernetes learning curve is steep, especially at the beginning. However, once you get past this first shock, a whole new world of possibilities opens up in front of your eyes.

Agent workflow logic

This is typically a Python script written using libraries like

LangChain

Pydantic AI

LangGraph

LlamaIndex

or, even better, using a Rust alternative like Rig.
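Whatever library you pick, the workflow logic roughly boils down to a loop: ask the LLM what to do next, run the tool it asks for, feed the result back, and stop when it produces an answer. Below is a minimal framework-free sketch of that loop, not the API of LangGraph or any other library listed above. The `fake_llm` stub and the `lookup_order` tool are hypothetical, so it runs offline without an LLM server.

```python
from dataclasses import dataclass
from typing import Callable


# Hypothetical stand-in for an LLM call: a scripted stub so the loop runs
# offline. A real workflow would call an LLM server here instead.
def fake_llm(history: list[dict]) -> dict:
    if not any(m["role"] == "tool" for m in history):
        # No tool result yet: "decide" to call a tool.
        return {"tool": "lookup_order", "args": {"order_id": "A-42"}}
    # A tool result is available: produce the final answer from it.
    return {"answer": f"Your order status is: {history[-1]['content']}"}


@dataclass
class Agent:
    llm: Callable[[list[dict]], dict]
    tools: dict[str, Callable[..., str]]
    max_steps: int = 5  # guard against agents that never stop

    def run(self, user_message: str) -> str:
        history = [{"role": "user", "content": user_message}]
        for _ in range(self.max_steps):
            decision = self.llm(history)
            if "answer" in decision:               # the model is done
                return decision["answer"]
            tool = self.tools[decision["tool"]]    # the model asked for a tool
            result = tool(**decision["args"])
            history.append({"role": "tool", "content": result})
        raise RuntimeError("agent did not finish within max_steps")


agent = Agent(llm=fake_llm, tools={"lookup_order": lambda order_id: f"{order_id} shipped"})
print(agent.run("Where is my order A-42?"))  # Your order status is: A-42 shipped
```

The `max_steps` cap is a small but important production detail: without it, a confused model can keep calling tools (and burning tokens) forever.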

My tip

Rust is a compiled language that produces very small binaries, which translate into super slim containers running in your cluster. This means you can run 10-50x more agents in Rust than in Python on the same infrastructure. So you build safer, faster and cheaper agents.

LLM servers

LLM servers provide the text completions that agent workflows use to reason and to produce responses.
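From the agent's point of view, an LLM server is just another HTTP service in the cluster. The sketch below assumes your LLM server exposes an OpenAI-compatible `/v1/chat/completions` endpoint (many self-hosted servers do). The base URL and model name are hypothetical, and it only builds the request, so it runs without a live server.

```python
import json
import urllib.request


# Assumption: the LLM server speaks the OpenAI-compatible chat completions
# format. Base URL and model name passed in below are hypothetical.
def build_chat_request(base_url: str, model: str, messages: list[dict],
                       temperature: float = 0.0) -> urllib.request.Request:
    payload = {"model": model, "messages": messages, "temperature": temperature}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request(
    "http://llm-server.agents.svc:8000",   # hypothetical in-cluster service URL
    "my-model",                             # hypothetical model name
    [{"role": "user", "content": "Summarise this support ticket."}],
)
print(req.get_method(), req.full_url)
# Actually sending it would be urllib.request.urlopen(req), which needs a running server.
```

Keeping the endpoint behind a plain URL means you can swap a third-party API for a self-hosted model server without touching the agent logic.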

Tool servers

Tool servers act as gateways between the agents and the external services these agents invoke to accomplish their tasks.
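Here is a minimal sketch of what "gateway" can mean in practice: a registry of tools, and a single entry point that parses a call, validates its arguments and only then dispatches it. The `get_player_account` tool (borrowed from the gaming support example) and the JSON call shape are hypothetical, not a specific product's API.

```python
import json
from typing import Any, Callable

# Hypothetical tool registry: each tool declares its required arguments so the
# gateway can reject malformed calls before they reach the external service.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, required: list[str]):
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = {"fn": fn, "required": required}
        return fn
    return register


@tool("get_player_account", required=["player_id"])
def get_player_account(player_id: str) -> dict:
    # A real tool server would call the game's account API here.
    return {"player_id": player_id, "status": "active"}


def handle_call(raw: str) -> str:
    """Gateway entry point: parse a JSON call, validate it, dispatch it."""
    call = json.loads(raw)
    spec = TOOLS.get(call.get("name"))
    if spec is None:
        return json.dumps({"error": f"unknown tool {call.get('name')!r}"})
    args = call.get("arguments", {})
    missing = [a for a in spec["required"] if a not in args]
    if missing:
        return json.dumps({"error": f"missing arguments: {missing}"})
    return json.dumps({"result": spec["fn"](**args)})


print(handle_call('{"name": "get_player_account", "arguments": {"player_id": "p-7"}}'))
```

Because every call goes through `handle_call`, this is also the natural place to add auth, rate limiting and logging, instead of scattering them across agents.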

And the thing is, with the emergence of standards for

Agent-tool interaction, like the Model Context Protocol (MCP) introduced by Anthropic

Agent-to-Agent interaction, like the newly proposed Agent2Agent protocol by Google.

this modularisation will take us to yet another era of microservice architectures.

In this case, micro-agent architectures.

Which means that, if you and your company want to make the most of it, you need to go back to good old software engineering and DevOps best practices.
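To make the "standards" part concrete: MCP messages are JSON-RPC 2.0, and an agent invokes a tool with a `tools/call` request carrying the tool name and its arguments. The sketch below only builds and parses those envelopes. Transport (stdio or HTTP), session initialisation and capability negotiation are left out, and the tool name, arguments and sample response are hypothetical.

```python
import json
from itertools import count

# Sketch of MCP's JSON-RPC 2.0 envelopes for a tool call. Transport and the
# initialisation handshake are omitted; tool name and arguments are hypothetical.
_ids = count(1)


def mcp_tool_call(name: str, arguments: dict) -> str:
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),          # lets the client match responses to requests
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def parse_response(raw: str, expected_id: int) -> dict:
    msg = json.loads(raw)
    if msg.get("id") != expected_id:
        raise ValueError("response does not match request id")
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    return msg["result"]


request = mcp_tool_call("get_player_account", {"player_id": "p-7"})
print(request)

# A hypothetical server reply carrying a text content item.
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"content": [{"type": "text", "text": "active"}]}}'
print(parse_response(reply, expected_id=json.loads(request)["id"]))
```

Once every tool and agent speaks the same envelope, each one can be deployed, scaled and versioned as its own service, which is exactly the micro-agent picture above.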

In a nutshell

IMHO, this is the design that unlocks the door to agentic architectures that help you either

make more money for your business, or

spend less money running your business.

Wanna learn LLMOps that work in the Real World?

Marius Rugan and I are preparing a hands-on course on LLMOps.

No more toys.

No more demos.

Just the things we learn every day while working for our clients, building LLM and agent-based systems.

Which means we will share with you all the tips and tricks we discover at work building production-ready LLM solutions.

Hope this helps,

Enjoy the weekend,

And talk to you next week

Pau
